Talking to AI Without Exposing Your Data: Lessons from the Privacy Minefield

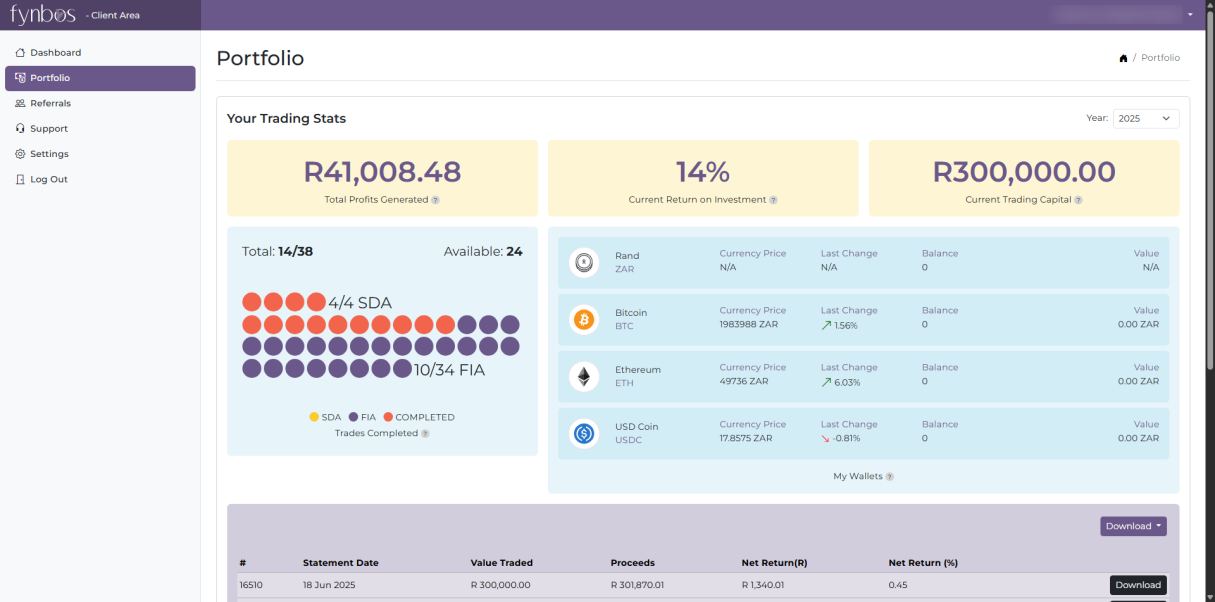

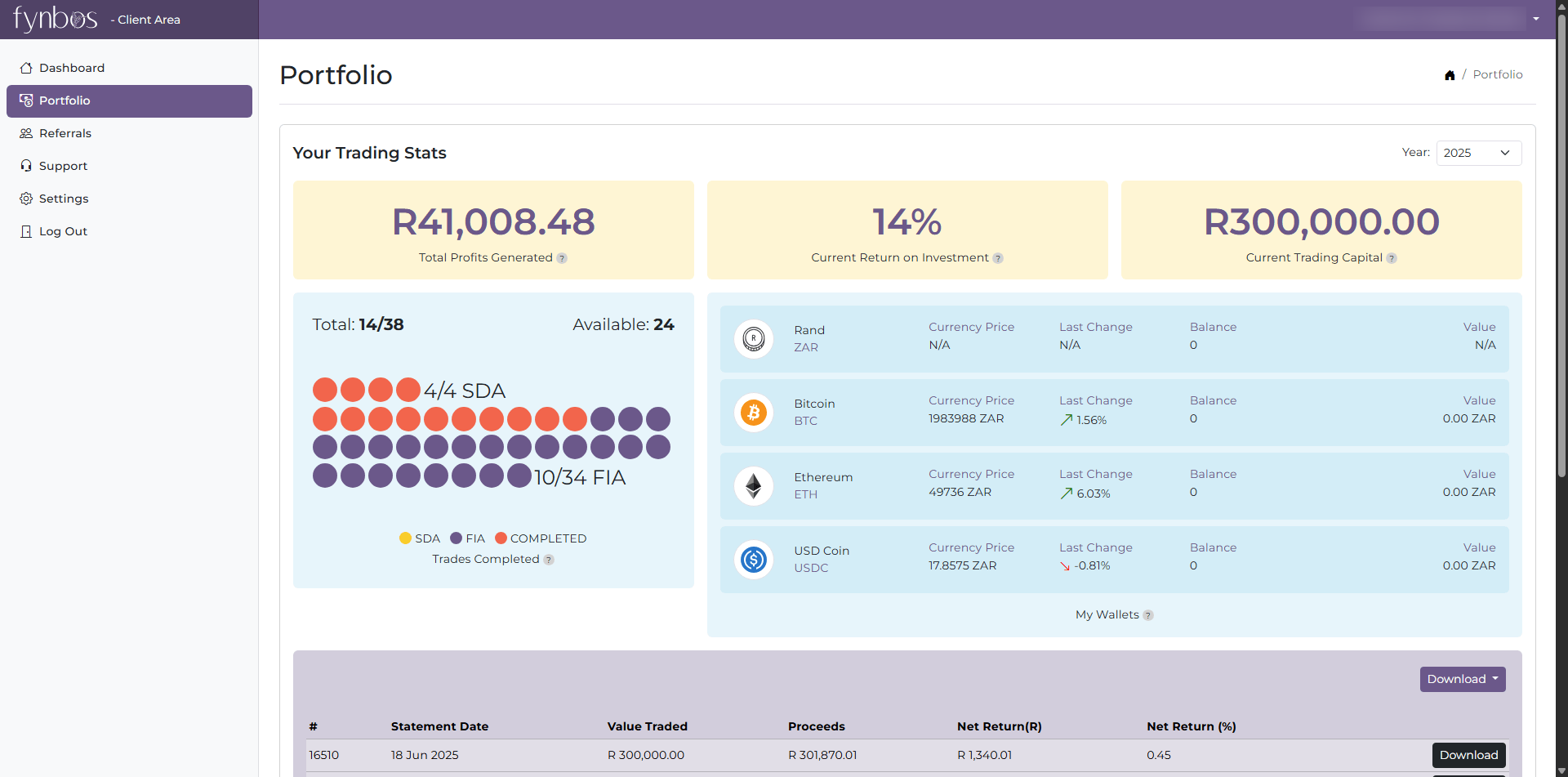

Work Process

See the value in our Development Process

Step 01

Project Discovery

Our Project Managers collaborate with your team to fully understand your unique needs.

Step 02

Strategy Development

We draft a proposal outlining how we expect the project to unfold, either as a whole or in phases.

Step 03

Implementing the Solution

Our transparent process keeps clients informed about timeframes and budget throughout the project.

Step 04

Continuous Monitoring

We work with clients to continuously monitor progress as well as changes to all environments.

Book a call with one of our Project Managers today to see how Teruza can assist you with your development needs and ultimately boost your project's potential.
